Introduction

Artificial intelligence is moving beyond simple chatbots. The new generation of Agentic AI systems can plan, act, and make decisions on their own — booking trips, coding software, or managing business workflows without constant human input.

But autonomy brings new risks. When AI agents are empowered to act independently, even a small error or manipulation can cascade into serious real-world consequences.

What Is Agentic AI?

Agentic AI refers to artificial intelligence systems capable of autonomous goal-directed behavior. Instead of responding to single commands, these models can:

- Plan multi-step actions (e.g., “book a flight, find a hotel, create a meeting invite”).

- Interact with APIs, apps, and other systems.

- Adapt their strategy based on feedback and context.

Frameworks like LangChain, AutoGPT, and OpenAI Assistants API have made it easy to build autonomous agents that combine reasoning with real-world actions.

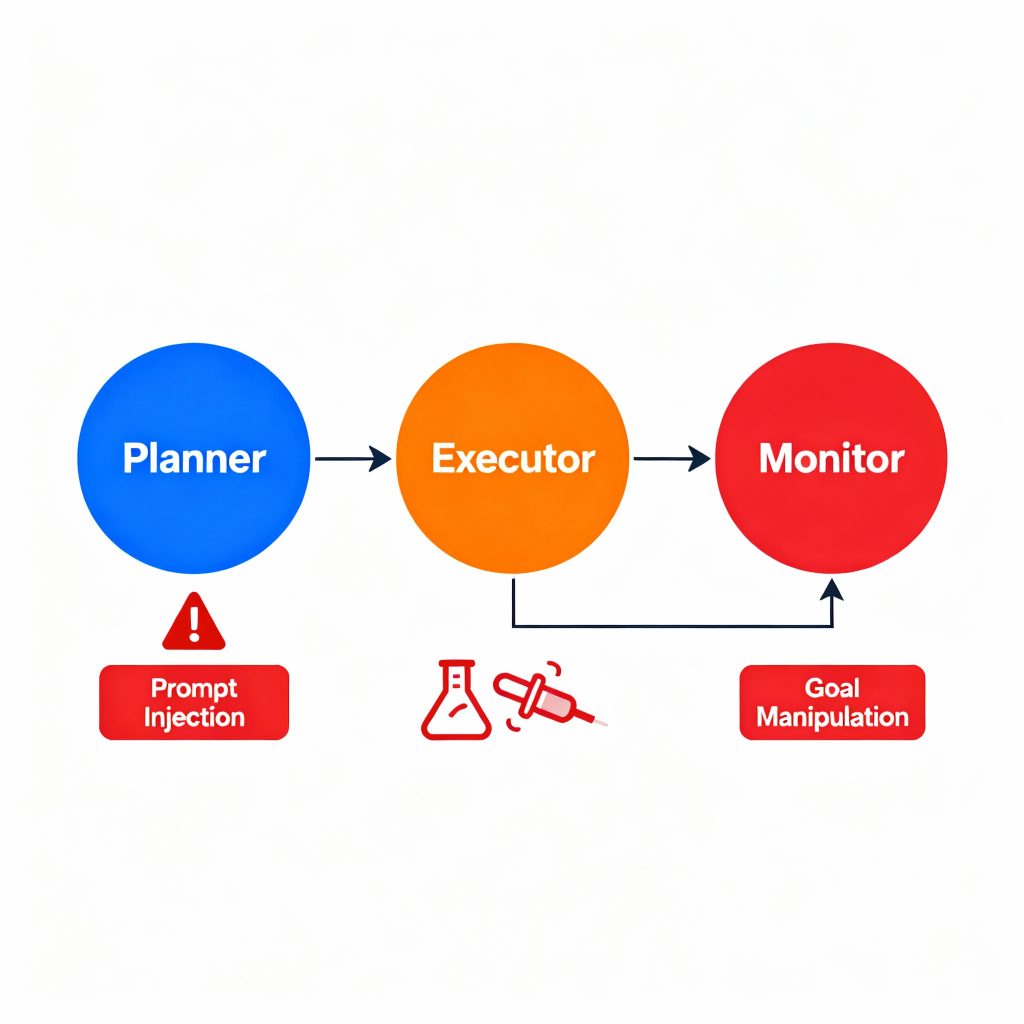

Emerging Risks

With autonomy comes a new category of threats. Traditional cybersecurity focuses on securing systems from human attackers — Agentic AI adds the challenge of securing systems from themselves and from indirect manipulation.

- Goal hacking: Attackers manipulate an agent’s objectives or prompts so that it performs harmful actions.

- Data poisoning: Ingesting manipulated data leads to flawed reasoning or decisions.

- Prompt injection: Hidden instructions embedded in external content (e.g., websites, PDFs) trick the agent into executing malicious tasks.

- Unauthorized actions: Over-permissioned APIs or poor sandboxing allow agents to access or modify sensitive data.

- Cascading failures: When multiple agents collaborate, one compromised agent can propagate bad instructions through the entire network.

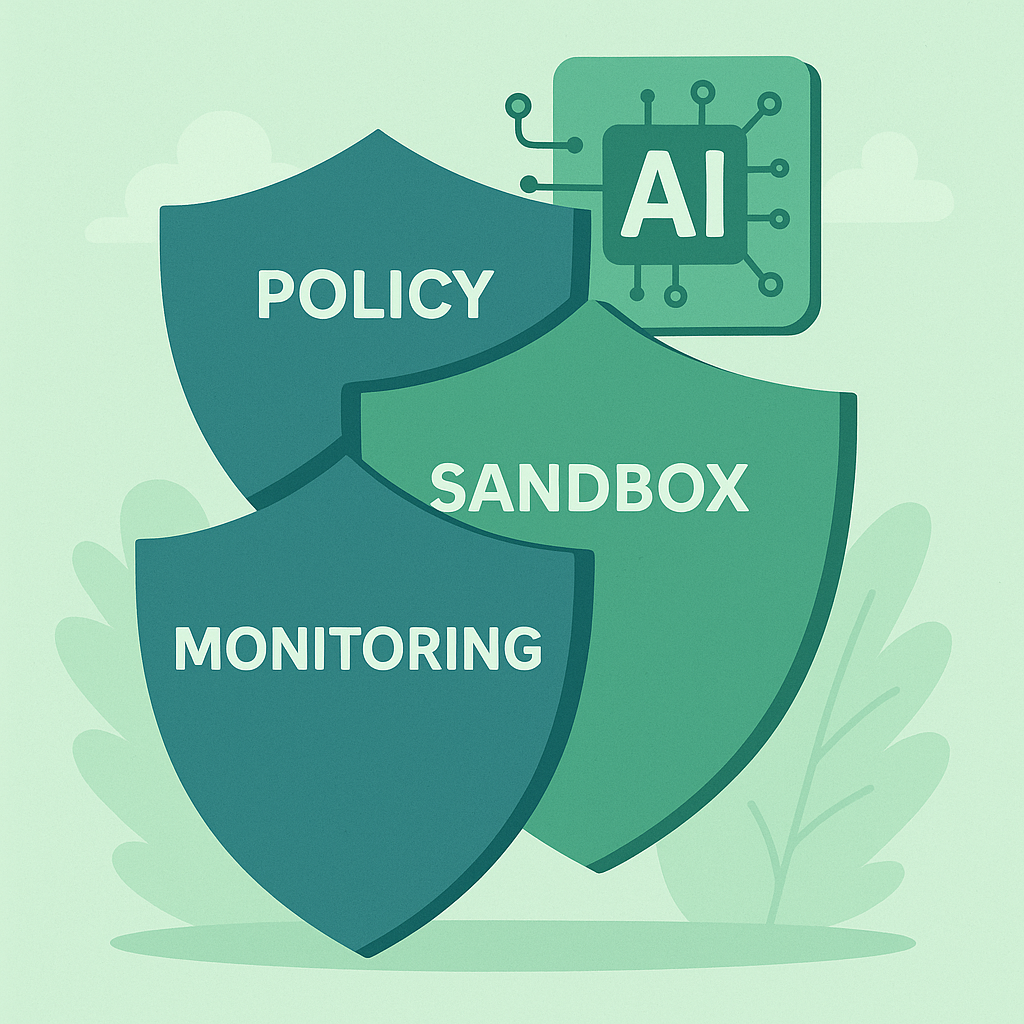

Defense Strategies

Securing Agentic AI requires a layered approach combining traditional cybersecurity with AI-specific safeguards.

- Policy-based control: Define explicit allow/deny rules for what the agent can access or modify.

- Sandboxed execution: Run agents in isolated environments to prevent system-wide damage.

- Continuous monitoring: Log every action and analyze behavior for anomalies or policy violations.

- Prompt hygiene: Sanitize inputs and strip hidden or unexpected instructions before passing them to the model.

- Human-in-the-loop checkpoints: Require confirmation for sensitive operations (transactions, deletions, system changes).

Case Example: When Autonomy Goes Wrong

In early 2025, a prototype AI scheduling agent accidentally canceled hundreds of calendar events across a company after misinterpreting an optimization task. No data was stolen — but it revealed how autonomous logic without guardrails can cause real disruption.

Now imagine the same logic connected to cloud infrastructure, finance systems, or industrial automation.

Key Takeaways

- Agentic AI expands productivity — but also the attack surface.

- Security must evolve from protecting data to controlling behavior.

- Combine technical safeguards with policy and human oversight.

Conclusion

Autonomous AI systems are the future of intelligent automation — but autonomy without accountability is a recipe for risk. The next generation of AI security will be less about firewalls and more about behavioral governance.

👉 In short: Don’t just make AI smarter. Make it safer.

Sources

Inside AI News – AI Adoption in App Development

MIT Sloan Review – Five Trends in AI and Data Science for 2025

Schreibe einen Kommentar