Introduction

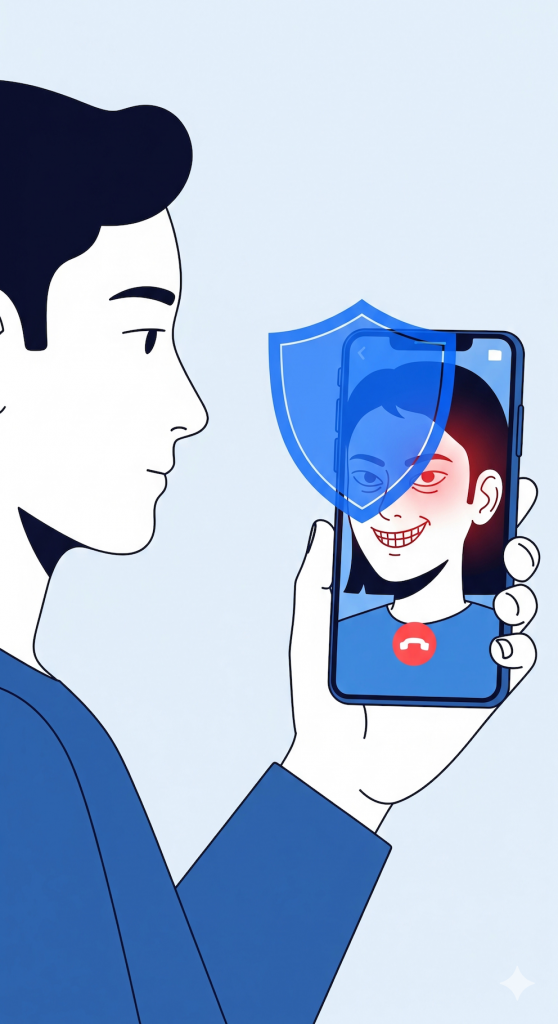

Imagine getting a video call from your CEO asking you to transfer money urgently. You see their face, you hear their voice – everything looks real. But what if it isn’t?

Welcome to the age of deepfake scams, where artificial intelligence makes it possible to mimic people with stunning accuracy. What started as entertainment tech has now become one of the most dangerous tools in the hands of cybercriminals.

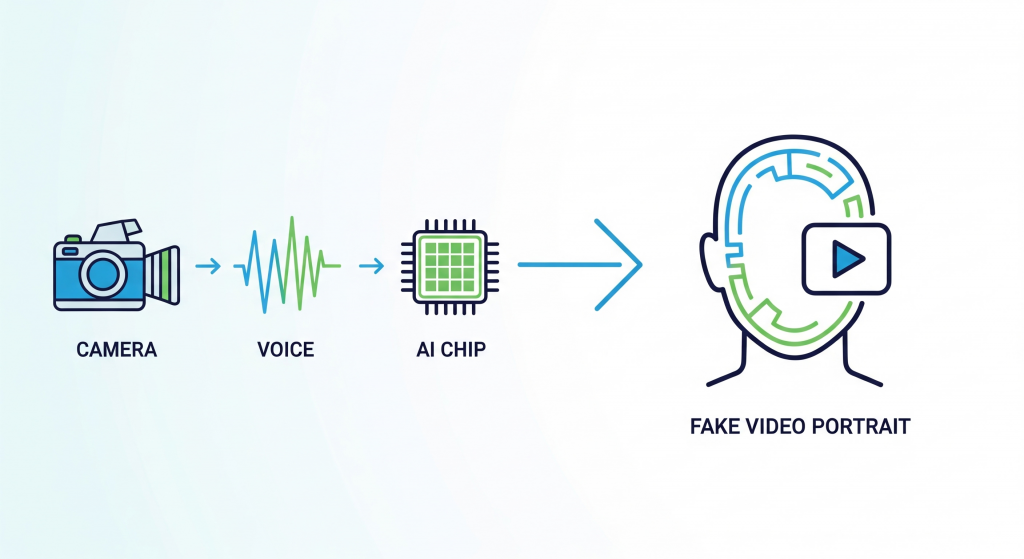

How Deepfake Scams Work

Deepfakes are produced by generative AI: deep learning models trained on large collections of images, video, and audio of a person. Once trained, these models can reconstruct that person’s face and voice, in some cases in real time during a live call.

- Face swaps: AI replaces one person’s face with another in videos.

- Voice cloning: Models reproduce a person’s voice and speech patterns from as little as a few seconds to a few minutes of recorded audio.

- Full video synthesis: Entirely new fake videos can be generated from scratch.

This technology has legitimate uses – film dubbing, accessibility, and education – but criminals also exploit it for fraud, blackmail, and disinformation.

Real-World Cases of Deepfake Scams

- 2019 – UK energy firm scam: Criminals used AI voice cloning to impersonate the chief executive of the firm’s German parent company and tricked the UK CEO into transferring €220,000.

- 2022 onward – Deepfake job interviews: The FBI has warned that attackers use fake video identities to apply for remote tech jobs, aiming to gain access to sensitive systems and data.

- Ongoing – Social media disinformation: Fabricated videos and audio of politicians spread online to manipulate public opinion.

How to Protect Yourself

The good news: with awareness and the right checks, you can defend against deepfake scams.

- Always verify requests: If your “boss” asks for money, confirm via a second channel (e.g., phone call, in-person).

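- Look for inconsistencies: Irregular blinking, lip movements that don’t match the audio, unnatural speech rhythms, or glitches around the face and background can reveal a deepfake, although the most convincing fakes show few of these signs.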

- Use detection tools: Emerging AI-based tools can analyze video and audio for signs of manipulation, though none of them is fully reliable.

- Awareness training: Teach employees about deepfake risks – treat it like phishing awareness 2.0.
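For organizations, the “always verify requests” rule can also be built into the payment workflow itself. The Python sketch below is one possible approach, not a standard tool: the trusted directory, the contact number, the channel names, and the €10,000 threshold are all hypothetical. A large transfer is released only after someone confirms it through a *different* channel, using contact details recorded in advance rather than any supplied by the request.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical trusted directory: contact details recorded in advance,
# never taken from the incoming request itself.
TRUSTED_DIRECTORY = {
    "ceo": {"phone": "+44 20 0000 0000"},  # placeholder number
}

# Hypothetical threshold above which independent confirmation is required.
THRESHOLD_EUR = 10_000


@dataclass
class PaymentRequest:
    requester: str     # who the request claims to come from, e.g. "ceo"
    amount_eur: float
    channel: str       # channel the request arrived on, e.g. "video_call"
    confirmations: set[str] = field(default_factory=set)  # channels that confirmed it


def confirm(request: PaymentRequest, channel: str, contact: str) -> bool:
    """Record a confirmation only if it came through a different channel
    than the request, using contact details from the trusted directory."""
    if channel == request.channel:
        return False  # same channel as the request: not independent
    known = TRUSTED_DIRECTORY.get(request.requester, {})
    if known.get(channel) != contact:
        return False  # contact not on file: it may have been supplied by the attacker
    request.confirmations.add(channel)
    return True


def may_execute(request: PaymentRequest) -> bool:
    """Small payments pass; large ones need at least one independent confirmation."""
    if request.amount_eur < THRESHOLD_EUR:
        return True
    return len(request.confirmations) >= 1


if __name__ == "__main__":
    req = PaymentRequest(requester="ceo", amount_eur=220_000, channel="video_call")
    print(may_execute(req))                             # False: not yet verified
    print(confirm(req, "video_call", "+44 20 0000 0000"))  # False: same channel
    print(confirm(req, "phone", "+44 20 0000 0000"))       # True: call-back to known number
    print(may_execute(req))                             # True
```

The key design choice is that a convincing face or voice on the original call counts for nothing: approval depends only on reaching the real person through a channel and number the attacker does not control.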

Key Takeaways

- Deepfakes are the next evolution of social engineering.

- Fraudsters use them for scams, disinformation, and identity theft.

- Awareness and multi-channel verification are the strongest defenses today.

Conclusion

Deepfakes are no longer just a futuristic concept – they are here, and they are being weaponized. By combining human skepticism with technical defenses, you can avoid becoming the victim of the next AI-driven scam.

👉 The rule is simple: if it looks too real to be true, double-check it.